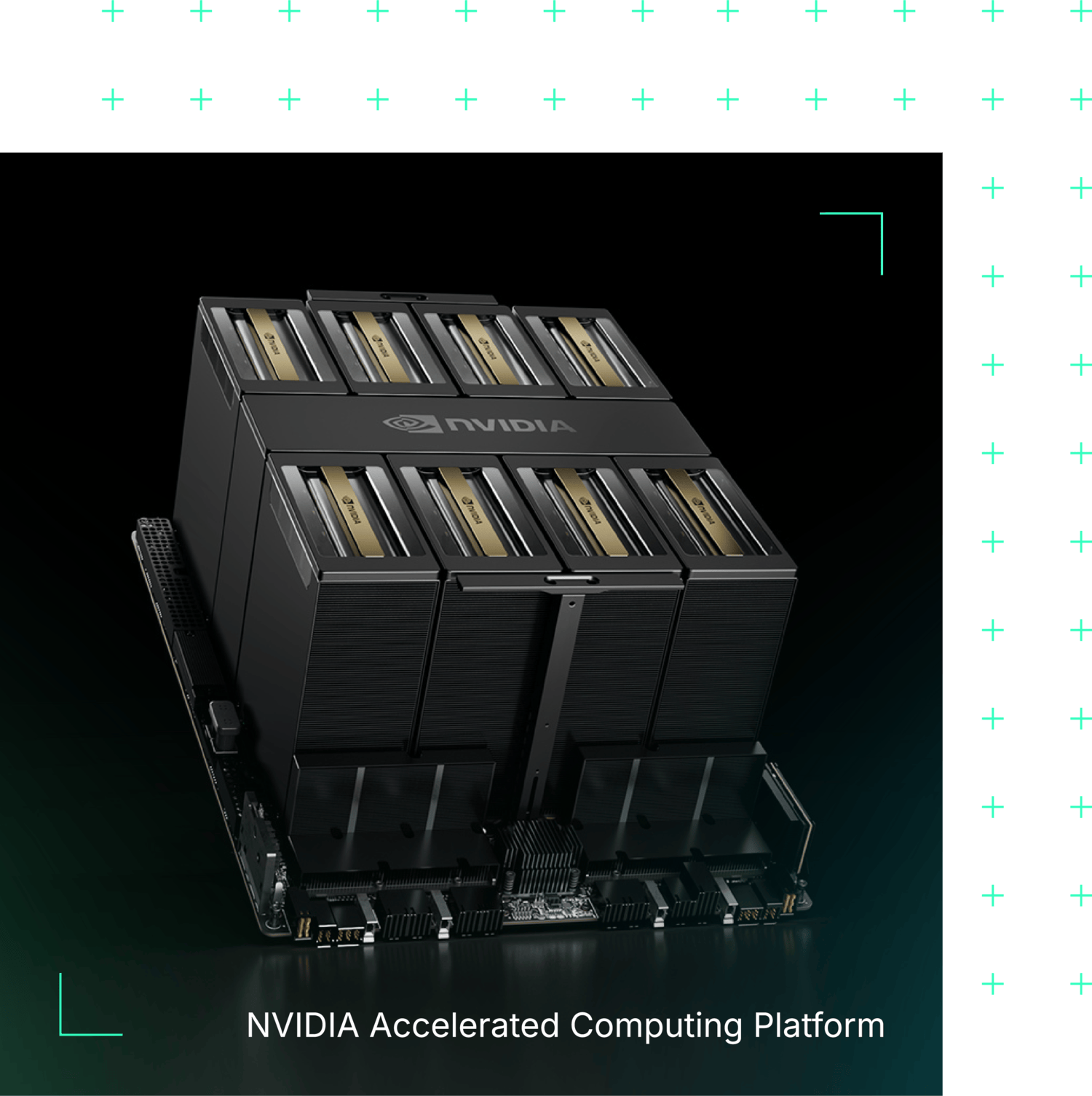

NVIDIA Accelerated Computing Platform

Hosted by Cirrascale

Get access to the NVIDIA accelerated computing platform, hosted by Cirrascale, for HPC and for AI training, tuning, and inference. Cirrascale delivers extremely fast interconnects and a fully accelerated software stack, so you can achieve the highest possible performance with the quickest time-to-value.